I’ve recently been playing with Ubuntu Core on my second Raspberry Pi 2. I like the concept of a minimalist host with atomic updates and the possibility of running my services inside containers. In addition, snap looks like an interesting package system (and more than that). But this setup does not fit my use cases: it is perfect for repeatable testing and as a safe deployment environment, whereas I need a system that is stable and safe but that I can also tinker with (modifying a specific configuration or the kernel to optimise it or to detect new devices) and that grows organically (depending on my free time).

Introduction to Ubuntu Core

So Ubuntu Core (and Project Atomic) do appeal to me, but they are too restrictive for my use cases. I need more freedom. Anyway, for those of you who might be interested in these projects, here is a quick review of both systems from a day-to-day perspective; I’m not going to explain the philosophy of Core/Atomic or the snap/atomic technologies.

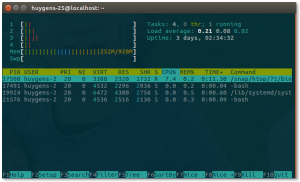

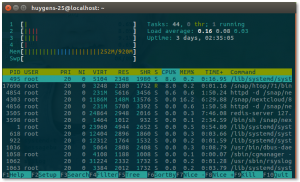

Both Ubuntu Core (armhf variant) and CentOS Atomic Host (x86_64 variant) felt a bit rough, and despite carrying the Ubuntu and CentOS names, they forced me to reconsider how I am used to administering such boxes. The basic concept is that you install a core (or minimalist) OS that you pretty much cannot change (most parts are read-only) but that has everything needed to run containers. On Atomic Host, there is no way to install additional packages; you need to add every other bit of software as a container. The big difference with Ubuntu Core is that it has snaps, which let you extend the core OS without having to install and configure containers manually. Once installed, a snap package feels more or less like a deb package you just installed. But there is a big difference: snaps are like little containers, or sandboxed processes, already neatly packaged, so they feel like a normal command while a lot is going on behind the scenes. Here is an example: I’ve installed `htop` on my Raspberry Pi 2, and I now get two distinct results depending on whether I run it as a standard user or with superuser rights, a behaviour that is uncommon on a standard Linux installation:

| Standard User | Super user |
|---|---|

As the htop case shows, when I run it as myself, it only shows the processes I own. This is not bad, but it differs from a traditional Linux distribution, so you need to get into the habit of running `sudo htop` to see all processes. Take care, though: you are then running htop as superuser, and htop lets you kill processes.

However, on the Raspberry Pi (and probably other ARMv7, aka armhf, platforms), very few snap packages are available so far. This is probably changing fast, but if you run Ubuntu Core 16 today, you can’t install many snaps and need to rely on Docker containers to add extra applications to the base system (more on that later, including its current limitations).

Basic system configuration absent

Let’s get back to the beginning, by which I mean the first boot right after installing Ubuntu Core. I still had my keyboard and screen attached (you need them to log in with your Launchpad SSO login and create the first user). After the first boot, I was not offered the possibility to change the keyboard layout (I do not have a US layout), but at least on Ubuntu Core you can change it afterwards by editing the file `/etc/default/keyboard`; this feat is not possible on Atomic Host. Anyway, it is not such a big issue: I use my Raspberry Pi mostly (if not always) via SSH, where it does not matter anymore. Staying on the localization topic, neither system allows changing the locale (language, regional preferences, etc.). On Atomic Host it is set to US with its “peculiar” time and date format (no offense!). Ubuntu Core suffers a similar fate, but it uses the C locale (which, sadly for me, also uses the US date/time format). Although I would stick to the English language anyway, I don’t like the regional choice and I don’t like the lack of choice here.
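To see what the C locale means for dates in practice, here is a small sketch. It assumes GNU `date` (for the `-d` option); the sample date and the helper name `c_locale_date` are arbitrary choices for illustration.

```shell
#!/bin/sh
# Format a fixed sample date with the locale's preferred date format (%x).
# Assumption: GNU date, which provides -d to parse a given date string.
sample="2016-12-31"

c_locale_date() {
    # In the C (POSIX) locale, %x expands to month/day/two-digit year.
    LC_ALL=C date -d "$1" +%x
}

c_locale_date "$sample"   # prints 12/31/16
```

Running the same command with another `LC_ALL` value only changes the output if that locale is actually installed on the host.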


So let’s move on to the SSH connection, where at least the keyboard layout is no longer an issue. But here comes the next one: whenever I use SSH, the first thing I do is launch a new tmux session or attach to an existing one. I’m open to other solutions, so if the host only has screen or byobu, I’m fine with that too. However, Ubuntu Core for ARMv7 does not ship with any of them by default, nor is there an existing snap package for them. So there is no simple way (short of compiling from source and creating a snap package) to have a safe SSH session.
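On a host where tmux is available, the attach-or-create habit described above can be sketched as follows. The session name `main` and the helper name `tmux_cmd` are placeholders, not something from this setup; the `-A` flag makes `new-session` attach when the session already exists.

```shell
#!/bin/sh
# Build the attach-or-create command for a named tmux session.
# "main" is only a placeholder session name.
tmux_cmd() {
    # -A: attach to the session if it exists, otherwise create it.
    printf 'tmux new-session -A -s %s\n' "${1:-main}"
}

if command -v tmux >/dev/null 2>&1; then
    echo "tmux found, run: $(tmux_cmd main)"
else
    # Per the text above, this is the branch taken on Ubuntu Core for armhf.
    echo "tmux is not installed on this host"
fi
```

From a laptop, the same idea would be something like `ssh -t user@host tmux new-session -A -s main`, where `user@host` is a placeholder.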


Docker

Normally, thanks to container technology, it is possible to install more or less everything I need, and with some clever aliases it would feel much like a snap installation. So I just wanted to create a Dockerfile that references Alpine Linux and installs tmux, then build the image with Docker. This is not possible with Ubuntu Core and the Docker snap. According to the Docker snap information, I should create a folder under `$HOME/apps/docker/1.6.xxx` (even though Docker 1.11.2 was installed, weird), but it does NOT work: the AppArmor profile installed with the Docker snap denies it. I’ve tried many different folders to no avail. This is not a misconfiguration of Ubuntu Core but rather one by the Docker snap packager, but the end result is that, for a user, Ubuntu Core on armhf is barely usable (too many essential tools missing, no snaps, and no easy way to use Docker).
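A Dockerfile of the kind described above could look roughly like this sketch. The base image name `alpine`, the tag `tmux-alpine` and the scratch directory are assumptions for illustration; on armhf you may need an ARM build of the base image.

```shell
#!/bin/sh
# Write a minimal Dockerfile into a scratch directory.
# Image name and tag are illustrative assumptions, not taken from the post.
workdir="$(mktemp -d)"

cat > "$workdir/Dockerfile" <<'EOF'
FROM alpine
RUN apk add --no-cache tmux
ENTRYPOINT ["tmux"]
EOF

echo "Dockerfile written to $workdir"
# On a host where builds work, the next step would be:
#   sudo docker build -t tmux-alpine "$workdir"
```

The build itself is left as a comment because, as explained above, it is exactly the step that the Docker snap refused.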

Another rough edge with Docker is that your default user does not belong to the Docker group, so you need to sudo every single Docker command. There is also no way to add yourself to the Docker group (the group is defined in the standard file `/etc/group`, which is on a read-only filesystem). Your default user is also not a traditional user but an “extra user” (I did not know this subtlety before), so a bunch of things are not compatible with it. For example, you cannot launch a container that runs as yourself (`docker run -u $(whoami) ...`) because you are not a standard user (no entry in `/etc/passwd`) but an extra user (see `/var/lib/extrausers/passwd`); at least it is possible to do it by specifying your user ID (e.g. 1000)! This is all logical but “rough”. A corollary of the previous statements is that you cannot run Docker in user namespaces mode (unprivileged Docker container) as you cannot add yourself to the Docker group.
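The numeric-ID workaround can be sketched like this. The helper name `numeric_user` and the `alpine` image are assumptions for illustration. Note that Docker looks up a name given to `-u` in the image's own user database, so numeric IDs tend to be the more robust form in general.

```shell
#!/bin/sh
# Build the value for docker's -u/--user option from numeric IDs,
# so that no user name has to be resolved.
numeric_user() {
    printf '%s:%s\n' "$(id -u)" "$(id -g)"
}

# Show where the current user is defined: classic passwd or extrausers.
# The extrausers path is the one mentioned in the text above.
me="$(id -un)"
for db in /etc/passwd /var/lib/extrausers/passwd; do
    if [ -r "$db" ] && grep -q "^${me}:" "$db"; then
        echo "$me is defined in $db"
    fi
done

# Print, rather than run, the resulting command.
echo "sudo docker run --rm -u $(numeric_user) alpine id"
```

The command is only printed so the sketch stays harmless on hosts without Docker; copy it out to actually try it.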

At least the Docker snap includes Docker Compose, which is cool. On Atomic Host, Docker is installed but Docker Compose is not. As the file system is also read-only, there is no good way to install Docker Compose in a central place; it has to be run within a container. At least on Atomic Host it is very easy (as on a standard Linux OS) to create Dockerfiles and build them. So these limitations (not having Docker Compose and some other tools) can be overcome with some effort.

Final Try

My next step was to download some scripts and tools. However, both curl and wget are absent from the base installation. Even git is not available, either as a snap or in the core installation. There is no way to build a Dockerfile, so in the end, to have tmux, curl, git, etc., I had to create an LXD container running Ubuntu just to get a normal OS (alternatively, I could download the scripts on my laptop and push them to Ubuntu Core via scp). But then I would simply prefer to run Ubuntu Server or Raspbian on my Raspberry Pi.

I’ve already lost too much time on this. Ubuntu Core seems nice and I really like its principles and approach, but it is definitely missing too many basic tools and is too rough for my taste and available free time.

Conclusion

I will install Ubuntu Server or CentOS 7 for armhf on my second Raspberry Pi 2. Ubuntu Core is perhaps still a bit too young (maybe this is specific to the armhf platform) and requires too much work for my needs. At least this experience made me understand that I don’t want a locked-down, safe box; I need flexibility.

If you have a Synology NAS that supports BTRFS (mostly the Intel-based NASes) and you decided to use BTRFS, a couple of shared folders are automatically created for you (like “homes” or “video”), but they don’t have the “compression” option set. Trying to edit the shared folder in the administration GUI does not help: the check box is grayed out, meaning it is not possible.


I bought a USB Wireless dongle from AVM called


This guide should work for all supported Raspberry Pi versions with an Ethernet interface (B, B+, 2, 3 and 3 B+). It could potentially work without an Ethernet interface if you have a WiFi USB dongle or a Raspberry Pi with integrated WiFi that is supported out of the box. I’ve tested the following on a Raspberry Pi 2 and a Raspberry Pi 3 B+. This guide assumes that you are doing all steps from a Unix machine (I’ve done them from Fedora Linux, but it should work on any Linux, BSD or OS X with potential adaptation). I suppose it is possible to do it on Windows, but I don’t have Windows and can’t explain how.


There are already

For pre-systemd Raspbian releases (up to Wheezy, or Debian 7), you need to mount the second partition now. First create a mount point, then mount the partition on it.


Optional: if you do not have an Ethernet interface and need to use WiFi, you need to add the WiFi configuration (your SSID – or WiFi network name – and your WiFi password). Assuming you have something like WPA2-PSK, you simply need to edit the file