I have a (now old) HP MicroServer with 4 HDDs. I installed Ubuntu 14.04 (then in beta) on it on a quiet Sunday in February 2014. It now runs Ubuntu 16.04 and still works perfectly. However, I’m not sure what I was thinking on that Sunday more than 3 years ago. I partitioned the 4 HDDs the same way: one partition for /boot, one for swap, and the last one for a BTRFS volume (with subvolumes to separate / from other spaces like /var or /home). My plan was to put the 4 /boot partitions in RAID10 and the 4 swap partitions in RAID0. I realised today that I had only used 2 partitions for /boot, configured as RAID1, and only 3 partitions for swap in RAID0.

This caused a recurring problem. Each /boot partition is 256MB, so instead of 512MB (RAID10 with 4 devices) I ended up with only 256MB (RAID1). That is not much, especially if you install the Ubuntu HWE (Hardware Enablement) kernels: you quickly run into unattended-upgrades failing to install security updates because there is no space left on /boot, and so on. It was becoming high maintenance, and with 4 kids to look after I had to fix it quickly.

But here is the magic of Linux: I did an online reshape from RAID1 to RAID10 (via RAID0) and an online resize of /boot (ext4). In 15 minutes I went from a problematic 256MB /boot to a low-maintenance 512MB one, without rebooting!

Here is how I did it. It requires mdadm 3.3+ (it could work with 3.2.1+, but I have not tested that) and a recent kernel (I had 4.10, but it should also work with the 4.4 kernel shipped with Ubuntu 16.04, and probably with older ones). Before you start, make a backup, test it, and know how to restore your /boot (or whatever partition you are changing).
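If you want to check these prerequisites from a script, here is a minimal sketch. It assumes GNU `sort -V` is available (it is on Ubuntu) and that `mdadm --version` prints a line such as `mdadm - v3.4 - 28th January 2016` on stderr; the helper names `version_ge` and `mdadm_version_of` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers to check the prerequisites mentioned above.

# version_ge A B: succeed if version A >= version B (relies on GNU sort -V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# mdadm_version_of STRING: extract "3.4" from "mdadm - v3.4 - 28th January 2016".
mdadm_version_of() {
    printf '%s\n' "$1" | sed -n 's/^mdadm - v\([0-9][0-9.]*\).*/\1/p'
}

# Only query mdadm if it is installed; it prints its version on stderr.
if command -v mdadm >/dev/null 2>&1; then
    v=$(mdadm_version_of "$(mdadm --version 2>&1)")
    if version_ge "$v" 3.3; then
        echo "mdadm $v: OK"
    else
        echo "mdadm '$v': older than 3.3 (or not recognised)" >&2
    fi
fi
echo "kernel: $(uname -r)"
```

The kernel version is only printed, not checked, since the minimum kernel is not precisely known.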

Increasing the size of a RAID0 array (for swap)

First, this is how I fixed the RAID0 for swap (no backup is necessary, but make sure you have enough free memory to turn the swap off). The current RAID0 array is md0 and consists of sda3, sdb3 and sdc3; the partition sdd3 is missing.

$ sudo mdadm --grow /dev/md0 --raid-devices=4 --add /dev/sdd3

mdadm: level of /dev/md0 changed to raid4

mdadm: added /dev/sdd3

mdadm: Need to backup 6144K of critical section..

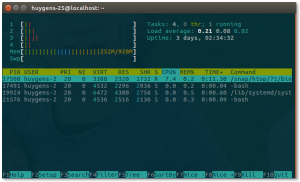

$ cat /proc/mdstat

md0 : active raid4 sdd3[4] sdc3[2] sda3[0] sdb3[1]

17576448 blocks super 1.2 level 4, 512k chunk, algorithm 5 [5/4] [UUU__]

[>....................] reshape = 1.8% (105660/5858816) finish=4.6min speed=20722K/sec

$ sudo swapoff /dev/md0

$ grep swap /etc/fstab

UUID=2863a135-946b-4876-8458-454cec3f620e none swap sw 0 0

$ sudo mkswap -L swap -U 2863a135-946b-4876-8458-454cec3f620e /dev/md0

$ sudo swapon -a

What I did here is tell MD to grow the array from 3 to 4 devices and add the new device. As the output shows, mdadm goes through RAID4 to do this, and you can see the reshape taking place (it was rather fast because the partitions were small, about 5.6GiB each). Once the reshape has finished, the array is bigger, but the swap area still has its old size. So I turned the swap off (the swap equivalent of unmounting), recreated it on the full device and turned it back on. I grepped for the swap entry in my `/etc/fstab` to see how it is referenced; here it uses the UUID. When recreating the swap I reused the same UUID, so I did not need to change `/etc/fstab`.
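The swap steps can be scripted. Below is a sketch, assuming the array is `md0`, the swap line in `/etc/fstab` uses `UUID=`, and `/proc/mdstat` has its usual layout (arrays separated by blank lines); the function names are hypothetical. Instead of the polling loop, `mdadm --wait /dev/md0` should also block until the reshape is done.

```shell
#!/bin/sh
# Sketch: recreate swap on a grown md array, keeping the UUID
# referenced in /etc/fstab. Helper names are hypothetical.

# md_busy NAME [MDSTAT]: succeed while NAME shows resync/recovery/reshape.
md_busy() {
    awk -v md="$1" '
        $2 == ":" { inblk = ($1 == md); next }   # header line of a section
        NF == 0   { inblk = 0; next }            # blank line ends an array block
        inblk && /(reshape|resync|recovery) *=/ { busy = 1 }
        END { exit (busy ? 0 : 1) }
    ' "${2:-/proc/mdstat}"
}

# swap_uuid FSTAB: print the UUID of the first swap entry written as UUID=...
swap_uuid() {
    awk '$1 !~ /^#/ && $3 == "swap" && $1 ~ /^UUID=/ {
        sub(/^UUID=/, "", $1); print $1; exit
    }' "$1"
}

# grow_swap DEV: wait for the reshape, then recreate swap with the same UUID.
# Needs root and enough free RAM to hold what is currently swapped out.
grow_swap() {
    dev=$1
    name=${dev#/dev/}
    while md_busy "$name"; do sleep 5; done
    uuid=$(swap_uuid /etc/fstab)
    [ -n "$uuid" ] || { echo "no UUID= swap entry in /etc/fstab" >&2; return 1; }
    swapoff "$dev" && mkswap -L swap -U "$uuid" "$dev" && swapon -a
}

# Usage (as root): grow_swap /dev/md0
```

Waiting matters because the larger size only becomes visible once the reshape completes; running `mkswap` earlier would just recreate the old size.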

Converting a RAID1 to RAID10 array online (without copying the data)

Now something a bit more complex: I want to migrate the array from RAID1 to RAID10 online. There is no direct path, so we need to go via RAID0. Note that RAID0 has no redundancy: while the array is in RAID0, a single disk failure loses /boot, so you really should back up first, as advised earlier.
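One way to make and test that backup is a tarball of /boot checked with GNU tar's `--diff` (also spelled `--compare` or `-d`). This is a minimal sketch, assuming GNU tar; the function names and the archive path in the usage line are hypothetical, and the archive must live outside the directory being backed up.

```shell
#!/bin/sh
# Sketch: archive a directory (e.g. /boot) and check the archive against it.
# Function names and the archive path are hypothetical; run as root for /boot.

# backup_dir SRC ARCHIVE: create a gzipped tarball of SRC's contents.
backup_dir() {
    tar -C "$1" -czf "$2" .
}

# verify_backup SRC ARCHIVE: succeed if the archive can be listed and
# GNU tar --diff finds no difference with the files currently in SRC.
verify_backup() {
    tar -tzf "$2" >/dev/null && tar -C "$1" --diff -zf "$2"
}

# Usage (as root):
#   backup_dir /boot /home/boot-backup.tar.gz &&
#     verify_backup /boot /home/boot-backup.tar.gz
```

This only shows the archive matches /boot at that moment; restoring still means extracting it onto a working /boot, which is worth rehearsing too.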

Converting from RAID1 to RAID0 online

The current RAID1 array is md1 and consists of sdb2 and sdc2. I’m going to convert it to RAID0. After the conversion, only one disk will belong to the array.

$ sudo mdadm --grow /dev/md1 --level=0 --backup-file=/home/backup-md0

$ cat /proc/mdstat

md1 : active raid0 sdc2[1]

249728 blocks super 1.2 64k chunks

$ sudo mdadm --misc --detail /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Feb 9 15:13:33 2014

Raid Level : raid0

Array Size : 249664 (243.85 MiB 255.66 MB)

Raid Devices : 1

Total Devices : 1

Persistence : Superblock is persistent

Update Time : Tue Jul 25 19:27:56 2017

State : clean

Active Devices : 1

Working Devices : 1

Failed Devices : 0

Spare Devices : 0

Chunk Size : 64K

Name : jupiter:1 (local to host jupiter)

UUID : b95b33c4:26ad8f39:950e870c:03a3e87c

Events : 68

Number Major Minor RaidDevice State

1 8 34 0 active sync /dev/sdc2

I printed some extra information about the array to show that it is still the same array (same UUID and creation time), now in RAID0 with only 1 disk.
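If you script the migration, it is worth checking between steps that the array is in the expected state before going on. Here is a sketch that reads one field from `mdadm --detail` output, assuming its usual `Key : value` layout; `md_field` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: read one field from `mdadm --detail` output so a script can check
# that a step produced the expected state. The helper name is hypothetical.

# md_field "Field Name": read `mdadm --detail` text on stdin, print the value.
md_field() {
    awk -v key="$1" -F ' : ' '
        { k = $1; sub(/^[ \t]+/, "", k); sub(/[ \t]+$/, "", k) }   # trimmed key
        k == key { v = $0; sub(/^[^:]*: */, "", v); print v; exit } # keep colons in value
    '
}

# Usage (as root), before moving on to RAID10:
#   detail=$(mdadm --misc --detail /dev/md1)
#   [ "$(printf '%s\n' "$detail" | md_field 'Raid Level')" = raid0 ] || exit 1
#   [ "$(printf '%s\n' "$detail" | md_field 'Raid Devices')" = 1 ] || exit 1
```

Only the text before the first ` : ` is treated as the key, so values containing colons (times, `jupiter:1`) come through intact.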

Converting from RAID0 to RAID10 online

$ sudo mdadm --grow /dev/md1 --level=10 --backup-file=/home/backup-md0 --raid-devices=4 --add /dev/sda2 /dev/sdb2 /dev/sdd2

mdadm: level of /dev/md1 changed to raid10

mdadm: added /dev/sda2

mdadm: added /dev/sdb2

mdadm: added /dev/sdd2

raid_disks for /dev/md1 set to 5

$ cat /proc/mdstat

md1 : active raid10 sdd2[4] sdb2[3](S) sda2[2](S) sdc2[1]

249728 blocks super 1.2 2 near-copies [2/2] [UU]

$ sudo mdadm --misc --detail /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Feb 9 15:13:33 2014

Raid Level : raid10

Array Size : 249664 (243.85 MiB 255.66 MB)

Used Dev Size : 249728 (243.92 MiB 255.72 MB)

Raid Devices : 2

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Jul 25 19:29:10 2017

State : clean

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Layout : near=2

Chunk Size : 64K

Name : jupiter:1 (local to host jupiter)

UUID : b95b33c4:26ad8f39:950e870c:03a3e87c

Events : 91

Number Major Minor RaidDevice State

1 8 34 0 active sync set-A /dev/sdc2

4 8 50 1 active sync set-B /dev/sdd2

2 8 2 - spare /dev/sda2

3 8 18 - spare /dev/sdb2

As a result of the conversion, we are in RAID10 but with only 2 active devices and 2 spares. We need to tell MD to use the 2 spares as well; otherwise we just have a RAID1 under a different name.
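The value for `--raid-devices` is simply the number of members, spares included. A sketch that derives it from the array's `/proc/mdstat` header line, where spares are marked `(S)`; the helper names are hypothetical, and failed members `(F)` are not handled.

```shell
#!/bin/sh
# Sketch: count member devices and spares of an md array from its
# /proc/mdstat header line. Helper names are hypothetical.

# md_line NAME [MDSTAT]: print the header line of array NAME.
md_line() {
    awk -v md="$1" '$1 == md && $2 == ":" { print; exit }' "${2:-/proc/mdstat}"
}

# count_members LINE: print "<total> <spares>" for an mdstat header line.
count_members() {
    printf '%s\n' "$1" | awk '{
        total = 0; spares = 0
        for (i = 3; i <= NF; i++)            # members look like sdb2[3] or sdb2[3](S)
            if ($i ~ /\[[0-9]+]/) { total++; if ($i ~ /\(S\)$/) spares++ }
        print total, spares
    }'
}

# Usage (as root):
#   set -- $(count_members "$(md_line md1)")
#   [ "$2" -gt 0 ] && mdadm --grow /dev/md1 --raid-devices="$1"
```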

$ sudo mdadm --grow /dev/md1 --raid-devices=4

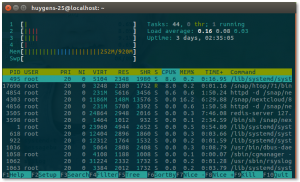

$ cat /proc/mdstat

md1 : active raid10 sdd2[4] sdb2[3] sda2[2] sdc2[1]

249728 blocks super 1.2 64K chunks 2 near-copies [4/4] [UUUU]

[=============>.......] reshape = 68.0% (170048/249728) finish=0.0min speed=28341K/sec

$ sudo mdadm --misc --detail /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Sun Feb 9 15:13:33 2014

Raid Level : raid10

Array Size : 499456 (487.83 MiB 511.44 MB)

Used Dev Size : 249728 (243.92 MiB 255.72 MB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Jul 25 19:29:59 2017

State : clean, resyncing

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 64K

Resync Status : 99% complete

Name : jupiter:1 (local to host jupiter)

UUID : b95b33c4:26ad8f39:950e870c:03a3e87c

Events : 111

Number Major Minor RaidDevice State

1 8 34 0 active sync set-A /dev/sdc2

4 8 50 1 active sync set-B /dev/sdd2

3 8 18 2 active sync set-A /dev/sdb2

2 8 2 3 active sync set-B /dev/sda2

Once again, the reshape is very fast, but only because the array is small. What we can see here is that the array is now 512MB, but the file system still only uses 256MB. The next step is to grow the file system.
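The new size follows from simple arithmetic: with the default near=2 layout, each block is stored twice, so usable space is devices × per-device size / 2. A sketch (the function name is hypothetical, sizes in 1K blocks, rounding to the chunk size ignored) reproduces the `Used Dev Size` and `Array Size` values reported above:

```shell
#!/bin/sh
# Sketch: usable size of an md RAID10 "near" layout, ignoring rounding to the
# chunk size: devices * per-device size / copies. Sizes in KiB (1K blocks).
raid10_size_kib() {
    devices=$1 dev_kib=$2 copies=${3:-2}
    echo $(( devices * dev_kib / copies ))
}

raid10_size_kib 2 249728   # 2 devices, near=2: 249728, no gain over RAID1
raid10_size_kib 4 249728   # 4 devices, near=2: 499456, the new Array Size
```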

Increasing the file system to use the full RAID10 array size online

Not all file systems can be grown online, but I have tested it with XFS and ext4 and it works perfectly (ext4 is grown with `resize2fs`, XFS with `xfs_growfs`). I suspect other file systems support it too, but I have never tried. In all cases, as already advised, make a backup before continuing.

$ sudo resize2fs /dev/md1

resize2fs 1.42.13 (17-May-2015)

Filesystem at /dev/md1 is mounted on /boot; on-line resizing required

old_desc_blocks = 1, new_desc_blocks = 2

The filesystem on /dev/md1 is now 499456 (1k) blocks long.

$ df -Th /boot/

Filesystem Type Size Used Avail Use% Mounted on

/dev/md1 ext4 469M 155M 303M 34% /boot
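Since ext4 and XFS are grown with different tools (and `xfs_growfs` takes the mount point rather than the device), a small dispatcher can pick the right command. This is a sketch covering only ext3/ext4 and XFS; `grow_fs_cmd` is a hypothetical name, and `findmnt` comes from util-linux.

```shell
#!/bin/sh
# Sketch: print the command that grows a mounted file system to fill its
# device. Only ext3/ext4 (resize2fs takes the device) and XFS (xfs_growfs
# takes the mount point) are covered; anything else is refused.
grow_fs_cmd() {
    fstype=$1 dev=$2 mnt=$3
    case $fstype in
        ext3|ext4) echo "resize2fs $dev" ;;
        xfs)       echo "xfs_growfs $mnt" ;;
        *)         echo "unsupported: $fstype" >&2; return 1 ;;
    esac
}

# Usage (as root), reading the type and device of /boot with findmnt:
#   set -- $(findmnt -n -o FSTYPE,SOURCE /boot)
#   cmd=$(grow_fs_cmd "$1" "$2" /boot) && $cmd
```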

When changing the /boot array, do not forget GRUB

/boot was already on a RAID array, so the GRUB configuration was correct and did not need to be changed. But if you reshaped your array from something other than RAID1 (e.g. RAID5), you should update GRUB, because it may need different modules for the initial boot steps. On Ubuntu run `sudo update-grub`; on other distributions see `man grub-mkconfig` for how to do it (e.g. `sudo grub-mkconfig -o /boot/grub/grub.cfg`).

Having the right GRUB configuration is not enough: you also need to make sure the GRUB boot loader is installed on all HDDs.

$ sudo grub-install /dev/sdX # Example: sudo grub-install /dev/sda
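To avoid forgetting a disk, the list can be derived from the array members in `/proc/mdstat`. A sketch, assuming a BIOS (non-UEFI) setup where GRUB goes on each whole disk, as the `grub-install /dev/sdX` command above implies, and `sdXN` partition names (NVMe names such as `nvme0n1p2` are not handled); `md_disks` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: list the whole disks behind an md array from /proc/mdstat, so the
# boot loader can be installed on each. Assumes sdXN partition names
# (e.g. sdb2[3] -> /dev/sdb).
md_disks() {
    awk -v md="$1" '$1 == md && $2 == ":" {
        for (i = 3; i <= NF; i++)
            if ($i ~ /^sd[a-z]+[0-9]+\[/) {
                d = $i; sub(/[0-9]+\[.*$/, "", d); print "/dev/" d   # strip "2[3](S)"
            }
        exit
    }' "${2:-/proc/mdstat}" | sort -u
}

# Usage (as root):
#   for d in $(md_disks md1); do grub-install "$d"; done
```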
