Two years ago I was publishing a post to build docker-compose on an ARM machine. Nowadays, you can find docker-compose on PyPI. However, if you intent to run docker-compose on a platform without Python dependencies, you might still be interested in my guide which generates an ELF binary executable.

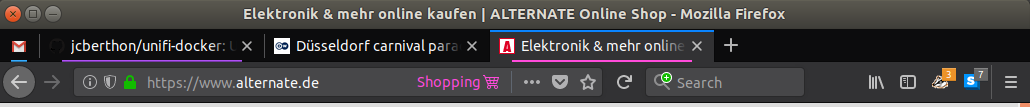

My previous guide has worked well until release 1.22.0 after which the Dockerfile.armhf (which was merged) has been upgraded to match the changes for the X86-64 platform but broke my build instructions. The builds seems to work and generate an executable but it fails to run due to missing dependencies:

+ dist/docker-compose-Linux-armv7l version

[446] Failed to execute script docker-compose

Traceback (most recent call last):

File "bin/docker-compose", line 5, in

from compose.cli.main import main

File "/code/.tox/py36/lib/python3.6/site-packages/PyInstaller/loader/pyimod03_importers.py", line 627, in exec_module

exec(bytecode, module.dict)

File "compose/cli/main.py", line 13, in

from distutils.spawn import find_executable

ModuleNotFoundError: No module named 'distutils'

I have not found the root-cause of the problem as I am not familiar with tox, but it looks like a configuration problem of that tool. So I decided to simply use Python3 built-in virtualenv.

As in my previous guide, you need to clone the repository and choose a branch. You can take the release branch or a specific version branch (e.g. bump-1.23.2).

$ git clone https://github.com/docker/compose.git

$ cd compose

$ git checkout bump-1.23.2

The two next shell commands should modify the original build script to use virtualenv and to add the missing dependencies (which are correctly installed in the tox environment but would be missing in ours).

$ sed -i -e 's:^VENV=/code/.tox/py36:VENV=/code/.venv; python3 -m venv $VENV:' script/build/linux-entrypoint

$ sed -i -e '/requirements-build.txt/ i $VENV/bin/pip install -q -r requirements.txt' script/build/linux-entrypoint

Now you can follow the exact same steps as in the previous guide. In summary:

$ docker build -t docker-compose:armhf -f Dockerfile.armhf .

$ docker run --rm --entrypoint="script/build/linux-entrypoint" -v $(pwd)/dist:/code/dist -v $(pwd)/.git:/code/.git "docker-compose:armhf"

$ sudo cp dist/docker-compose-Linux-armv7l /usr/local/bin/docker-compose

$ sudo chown root:root /usr/local/bin/docker-compose

$ sudo chmod 0755 /usr/local/bin/docker-compose

$ docker-compose version

docker-compose version 1.23.2, build 1110ad01

docker-py version: 3.6.0

CPython version: 3.6.8

OpenSSL version: OpenSSL 1.1.0j 20 Nov 2018

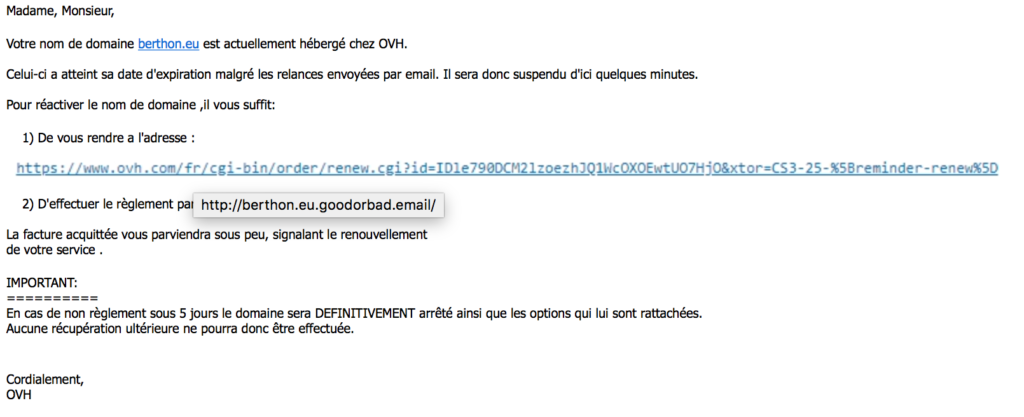

today at yet another phishing attempt.

today at yet another phishing attempt.

– you are used to create application that will run in the background (as a service) under a dedicated user and for which you need to handle the permissions for the data this application might need to use. So introducing containers (without user namespaces) should not bring any surprise here, it is part of the expectations. But you will see later that you can still be bitten by some edge cases from the container implementation.

– you are used to create application that will run in the background (as a service) under a dedicated user and for which you need to handle the permissions for the data this application might need to use. So introducing containers (without user namespaces) should not bring any surprise here, it is part of the expectations. But you will see later that you can still be bitten by some edge cases from the container implementation.