This article describes the steps to install Ubuntu Server 16.04 on a Raspberry Pi 2. It provides extra steps so that no screen or keyboard is required on the Raspberry Pi: it will be headless. Of course, you still need a screen and keyboard on the computer on which you download the image and write it to the Micro SD card. It is similar to a previous article about installing Debian on a Raspberry Pi 2, also in headless mode.

Disclaimer: you need a minimum of knowledge about computers, operating systems, Linux and the Raspberry Pi. If you just want to install an operating system on your Raspberry Pi, get NOOBS, the Raspberry Pi Foundation installer. This guide is for more advanced users. If you follow this guide but make mistakes, you might wipe out disk content or even brick your Micro SD card.

Known limitations: This guide will not work for the Raspberry Pi 3 (unless you follow these extra steps to boot the Ubuntu Server raspi2 image on a Raspberry Pi 3). It currently cannot work easily on the Raspberry Pi 3 B+ either, for two reasons. First, Ubuntu ships no specific DTB (Linux kernel device tree blob) for it, although some people on Fedora 28 beta have succeeded by simply renaming the DTB of the Raspberry Pi 3 model. Second, this model needs a newer U-Boot: the version in the Ubuntu Server images is older and does not currently support the 3 B+, and even the Raspberry Pi 2 image for Bionic Beaver, the current development version which will become Ubuntu 18.04, does not support it yet.

Install the Ubuntu Server image

Grab the official Ubuntu Server image for Raspberry Pi 2 (the latest version at time of writing is ubuntu-16.04.4-preinstalled-server-armhf+raspi2.img.xz; starting from the most recent point release saves you time when upgrading and saves some write cycles on your Micro SD card). Once it is downloaded, insert the Micro SD card in your computer (you probably need a USB card reader for that) and figure out which device it corresponds to; see the Ubuntu documentation for further guidance. I assume you know what you are doing, but be wary: the next command, if run on the wrong device, could wipe out the data on that device. I do not take any responsibility if things go wrong.

$ xzcat ubuntu-16.04.4-preinstalled-server-armhf+raspi2.img.xz | sudo dd of=<device> bs=4M oflag=dsync status=progress
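Before running the command above, it can help to double check that the target is not a disk that is currently in use. Running lsblk lists the block devices with their sizes and mount points. The following is only a minimal sketch, assuming a Linux host where mounted file systems are listed in /proc/mounts; the function name device_is_mounted and its optional second parameter are hypothetical and exist only for this illustration.

```shell
#!/bin/sh
# Return 0 (true) if <device> or one of its partitions is listed as mounted.
# $1: device path, e.g. /dev/sdb (example value, adapt to your system)
# $2: mounts table to read; hypothetical parameter, defaults to /proc/mounts
device_is_mounted() {
    device="$1"
    mounts_file="${2:-/proc/mounts}"
    # The first column of each line is the source device, e.g. "/dev/sdb1".
    # A simple prefix match also catches the partitions of the device.
    awk -v dev="$device" 'index($1, dev) == 1 { found = 1 } END { exit !found }' "$mounts_file"
}
```

If the function reports the device as mounted, either the desktop auto-mounted the card (unmount it first) or the device is one of your own disks (stop and check again). The prefix match is deliberately crude, so treat a positive result as a reason to look closer and not as a definitive answer.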

Create a user account and allow SSH access

Then make sure to flush pending writes to the card and mount the newly created partition (normally two partitions are created; we are interested in the second one, which should be named <device>p2 or <device>2):

$ sync
$ sudo mkdir -p /mnt/rpi
$ sudo mount <device>2 /mnt/rpi
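Whether the second partition is called <device>p2 or <device>2 follows a Linux naming convention: when the device name ends with a digit (for example /dev/mmcblk0), a "p" is inserted before the partition number. A small sketch of that rule; the helper name partition_path is hypothetical and the device names are only examples.

```shell
#!/bin/sh
# Print the path of partition number $2 on block device $1.
# Devices whose name ends in a digit get a "p" separator (mmcblk0 -> mmcblk0p2),
# others get the number appended directly (sdb -> sdb2).
partition_path() {
    case "$1" in
        *[0-9]) printf '%sp%s\n' "$1" "$2" ;;
        *)      printf '%s%s\n' "$1" "$2" ;;
    esac
}
```

For example, partition_path /dev/mmcblk0 2 prints /dev/mmcblk0p2, while partition_path /dev/sdb 2 prints /dev/sdb2.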

User account creation

As the Raspberry Pi uses an ARM processor and the computer on which I created the Micro SD card is a x86_64 processor, I cannot simply chroot and execute adduser in the newly mounted partition. The programs are compiled for a different architecture. So to add a new user we will need to do it manually by editing system files. We will create a new user and group, then add the corresponding entries in the files where the passwords are kept.

Add a new user (replace $(whoami) by your username if you want a different username than your current one).

$ echo "$(whoami):x:1000:1000:<Full Name>:/home/$(whoami):/bin/bash" | sudo tee -a /mnt/rpi/etc/passwd

Now create your group by editing /mnt/rpi/etc/group:

$ echo "$(whoami):x:1000:" | sudo tee -a /mnt/rpi/etc/group

Now edit the group password database:

$ echo "$(whoami):*::$(whoami)" | sudo tee -a /mnt/rpi/etc/gshadow

And the user password database. The account has no default password, but SSH key-based authentication over the network is allowed and you will be asked to set a password upon first login. Note that with this configuration remote SSH login cannot happen without the SSH key, so it is a secure configuration:

$ echo "$(whoami)::0:0:99999:7:::" | sudo tee -a /mnt/rpi/etc/shadow
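Since these four files are edited by hand, a quick sanity check of the entries can catch a missing or extra colon. An entry has 7 colon-separated fields in passwd, 4 in group, 4 in gshadow and 9 in shadow. The following is a minimal sketch; the helper name count_fields and the user name alice are hypothetical and only serve as an illustration.

```shell
#!/bin/sh
# Print the number of colon-separated fields in a single entry.
# Empty fields (including trailing ones) are counted as well.
count_fields() {
    printf '%s\n' "$1" | awk -F: '{ print NF }'
}

# Example entries in the same shape as the ones written above,
# with the hypothetical user name "alice" instead of $(whoami).
count_fields 'alice:x:1000:1000:Alice:/home/alice:/bin/bash'   # passwd
count_fields 'alice:x:1000:'                                   # group
count_fields 'alice:*::alice'                                  # gshadow
count_fields 'alice::0:0:99999:7:::'                           # shadow
```

In the shadow entry, the empty second field means there is no password, and the 0 in the third field (date of last password change) is what forces the password change at first login.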

Grant your user access to administrative tasks (via sudo), while still requiring the user to enter their own password:

$ echo "$(whoami) ALL=(ALL) ALL" | sudo tee /mnt/rpi/etc/sudoers.d/20_$(whoami)_superuser

User home folder and SSH access

Now we shall create the user’s home and add the SSH public key so we can log in (it is assumed that you have a public RSA key under your home directory named ~/.ssh/id_rsa.pub; change the name if yours is different):

$ sudo cp -R /mnt/rpi/etc/skel /mnt/rpi/home/$(whoami)
$ sudo chmod 0750 /mnt/rpi/home/$(whoami)
$ sudo mkdir -m 0700 /mnt/rpi/home/$(whoami)/.ssh
$ cat ~/.ssh/id_rsa.pub | sudo tee -a /mnt/rpi/home/$(whoami)/.ssh/authorized_keys
$ sudo chmod 0600 /mnt/rpi/home/$(whoami)/.ssh/authorized_keys
$ sudo chown -R 1000:1000 /mnt/rpi/home/$(whoami)
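The SSH daemon can refuse key authentication when the home directory or the key files are too permissive, so it may be worth verifying the modes set above before unmounting the card. A minimal sketch, assuming GNU stat (as found on most Linux distributions); the helper name check_mode is hypothetical.

```shell
#!/bin/sh
# Compare the octal permission mode of a path with an expected value.
# $1: path to check, $2: expected mode without leading zero (e.g. 700)
# Prints one line and returns non-zero on mismatch.
check_mode() {
    actual=$(stat -c '%a' "$1")
    if [ "$actual" = "$2" ]; then
        echo "OK $1 ($actual)"
    else
        echo "MISMATCH $1 (have $actual, want $2)"
        return 1
    fi
}
```

With the card still mounted, you could for instance run sudo sh on a script that calls check_mode /mnt/rpi/home/<username>/.ssh 700 and check_mode /mnt/rpi/home/<username>/.ssh/authorized_keys 600, where <username> is the account created earlier.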

Set up systemd to enable SSH access and headless mode

Normally everything else should already be set up correctly. However, you might want to have a look at the systemd configuration; of most interest are which default target is in use (for headless you want multi-user.target) and whether the SSH service is part of the default target. What I did was the following (it also avoids creating the ubuntu user):

$ cd /mnt/rpi/lib/systemd/system
$ sudo rm -f default.target
$ sudo ln -s multi-user.target default.target
$ cd /mnt/rpi/etc/systemd/system/multi-user.target.wants
$ sudo ln -s /lib/systemd/system/ssh.service ssh.service
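To confirm that the symbolic links ended up as intended, their targets can be read back with readlink. This is only a sketch; the helper name link_points_to is hypothetical.

```shell
#!/bin/sh
# Return 0 (true) if $1 is a symbolic link whose stored target equals $2.
# The target is compared as written in the link, without resolving it.
link_points_to() {
    [ -L "$1" ] && [ "$(readlink "$1")" = "$2" ]
}
```

For example, link_points_to /mnt/rpi/lib/systemd/system/default.target multi-user.target should succeed once the default target has been switched. The ssh.service link is expected to store the absolute path /lib/systemd/system/ssh.service, which only resolves correctly once the card is booted on the Raspberry Pi, hence the comparison without resolving.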

(if the last command fails because the file already exists, then all is OK)

Start Ubuntu Server on Raspberry Pi 2

Now unmount the card and eject it: sudo umount /mnt/rpi. You can now safely insert the card in your Raspberry Pi 2 and boot it. It boots slower than with Raspbian, so be patient. Note that with all the above configuration, you do not need to boot with a keyboard or screen attached to your Raspberry Pi. Only an Ethernet cable and the power plug are necessary.

Now you need to find your newly installed Ubuntu Server on your network. The default hostname is ubuntu, so you can start with that (ssh $(whoami)@ubuntu), provided it does not conflict with another device of yours and your router is clever enough to have updated its DNS resolver. Otherwise you need to scan your network for it. To do so, you need to know your subnet (e.g. 192.168.1.0 with a netmask of 255.255.255.0) and have nmap installed on your computer (sudo dnf install nmap works on Fedora; on Debian/Ubuntu-based distros it is just as easy, use sudo apt-get install nmap instead).

$ sudo nmap -sP 192.168.1.0/24

Of course you need to adapt the above command to your subnet. The “/24” part is the netmask equivalent of 255.255.255.0. I recommend running the above command with sudo because it will display the MAC address of all the discovered devices which will help you spot your Raspberry Pi as nmap is displaying the vendor attached to each MAC address. See for yourself in the example output:

Starting Nmap 6.47 ( http://nmap.org ) at 2015-07-19 20:12 CEST
(...)
Nmap scan report for ubuntu.lan (192.168.1.9)
Host is up (0.0060s latency).
MAC Address: B8:27:EB:1E:42:18 (Raspberry Pi Foundation)
(...)
Nmap done: 256 IP addresses (8 hosts up) scanned in 2.05 seconds
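On a busy network, picking the right entry out of this output by eye gets tedious. The sketch below filters the nmap output for hosts whose MAC vendor mentions "Raspberry Pi" and prints their IP addresses. It assumes the output format shown above; the function name find_raspberry_pi is hypothetical.

```shell
#!/bin/sh
# Read nmap host discovery output on stdin and print the IP address of every
# host whose MAC address line mentions the vendor "Raspberry Pi".
find_raspberry_pi() {
    awk '
        /^Nmap scan report for/ {
            ip = $NF                 # last field: "(192.168.1.9)" or a bare address
            gsub(/[()]/, "", ip)     # strip the parentheses if present
        }
        /^MAC Address:/ && /Raspberry Pi/ { print ip }
    '
}
```

It can be used as sudo nmap -sP 192.168.1.0/24 | find_raspberry_pi. Remember that the MAC address lines only appear when nmap runs with sudo, so without it the filter prints nothing.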

Now you can simply connect to your RPi using SSH:

$ ssh $(whoami)@192.168.1.9
Enter passphrase for key '~/.ssh/id_rsa':
You are required to change your password immediately (root enforced)
Welcome to Ubuntu 16.04.1 LTS (GNU/Linux 4.4.0-1017-raspi2 armv7l)
(...)
142 packages can be updated.
69 updates are security updates.
(...)
WARNING: Your password has expired.
You must change your password now and login again!
(current) UNIX password:

Now that you are authenticated and have access to your newly installed Ubuntu Server, it is time to upgrade it.

Upgrade Ubuntu Server to latest packages

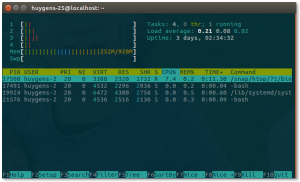

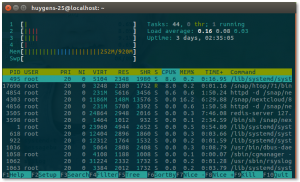

The tool tmux should already be installed on your system (otherwise run sudo apt install tmux). Use it to create a new session so that, even if you hit a network problem, your session is not killed (simply run tmux attach to get back to it):

$ tmux
$ sudo apt update
$ sudo apt dist-upgrade
$ sudo systemctl reboot

Note: it is possible that unattended-upgrade kicks in before you can do the upgrade manually. In that case, wait an hour or more (depending mainly on the speed of your internet connection and Micro SD card) before doing the above steps. It is still worthwhile, as the dist-upgrade command performs a more thorough upgrade (potentially removing deprecated packages or even downgrading some if necessary), and you will be in sync with the latest and greatest Ubuntu Server.

