One subject seems to be completely abstruse to many users of containers on Linux: sharing data between a host and a container, or between containers.

I do think that solving this problem with containers is not much different from solving it without them on Linux and Unix. From my perspective, there is not much difference between managing file permissions with or without containers. The big change for me is the introduction of namespaces, especially user namespaces.

So what exactly is the problem? And where does it come from?

The problem is that a process within a container runs with a certain user and group ID (UID and GID, respectively), and those IDs might differ from the ones of the caller (the user creating and running the container), which might not be obvious. This is especially true with container technologies like Docker, which by default runs the process within the container as root (unless overridden in the Dockerfile or on the command line), while any user with write access to the Docker socket can create such a container. So by default you have a discrepancy in UID and GID between the caller, probably a standard user, and a random Docker container.
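To make the discrepancy concrete, here is a minimal Python sketch. The helper name `created_file_owner` and the file name are hypothetical, chosen only for illustration. It creates a file and reports who owns it: a new file is owned by the effective UID of the process that creates it, whoever asked for that process to be started.

```python
import os
import tempfile


def created_file_owner(directory):
    """Create a file in `directory` and return the (uid, gid) that owns it."""
    path = os.path.join(directory, "owner-demo.txt")  # hypothetical file name
    with open(path, "w") as handle:
        handle.write("hello\n")
    info = os.stat(path)
    return info.st_uid, info.st_gid


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as scratch:
        uid, gid = created_file_owner(scratch)
        # The file's UID matches the effective UID of this process.
        print("process effective UID/GID:", os.geteuid(), os.getegid())
        print("new file owner UID/GID:   ", uid, gid)
```

Run as a normal user, both lines show that user's UID. Run by a root process in a container and pointed at a directory shared with a Linux host, the file would show up on the host as owned by UID 0 as well, assuming no user namespace remapping is in place.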

In traditional Unix/Linux, such a discrepancy is “normal” or “expected” behaviour, but you rarely run into it by accident. You usually cannot run a process as root from your normal user unless you use sudo or a setuid program, so a program you launch usually has the same UID/GID as your own user. And when you run a program with sudo, you understand that this might become a problem: if you use sudo to run `tcpdump -w net-trace.pcap`, you know the file net-trace.pcap will be owned by root and that you might not be able to access or delete it. The same reflex needs to apply when running a container.
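The ownership reasoning above can be sketched as a small Python function. This is a simplified model of the classic Unix permission check for regular files, not what any particular kernel does in full: it ignores ACLs, capabilities and security modules. The function name `may_access`, the example mode `0o644` and the UID/GID `1000` are assumptions made for the illustration.

```python
def may_access(file_uid, file_gid, file_mode, uid, gids, want):
    """Simplified Unix permission check for a regular file.

    `want` is a mask built from 4 (read), 2 (write) and 1 (execute).
    Exactly one permission class is used: owner, else group, else other.
    """
    if uid == 0:
        # Root passes read/write checks; execute still needs one x bit set.
        if want & 1:
            return bool(file_mode & 0o111)
        return True
    if uid == file_uid:
        granted = (file_mode >> 6) & 7   # owner bits
    elif file_gid in gids:
        granted = (file_mode >> 3) & 7   # group bits
    else:
        granted = file_mode & 7          # other bits
    return (granted & want) == want


if __name__ == "__main__":
    # Assumed example: a capture file owned by root:root with mode 0644,
    # accessed by a standard user with UID 1000 and GID 1000.
    print("user can read: ", may_access(0, 0, 0o644, 1000, {1000}, 4))
    print("user can write:", may_access(0, 0, 0o644, 1000, {1000}, 2))
```

With these assumed values the standard user can read the file but not write to it, which is the kind of surprise to anticipate whenever the process that wrote the file ran under different IDs than your own.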

When you have done Unix/Linux development for most of your career, and have adopted the principle of least privilege (I still know a few people who only use the root account), you are used to creating applications that run in the background (as a service) under a dedicated user, and to handling the permissions on the data such an application needs to use. So introducing containers (without user namespaces) should not bring any surprises here; it is part of the expectations. But you will see later that you can still be bitten by some edge cases of the container implementation.

So, let us see how to fix this problem of user/group IDs and file permissions. Note that the solution is similar whether you use containers or not, and that it applies to all container implementations (e.g. LXC, Docker, etc.). Then, for everyone, we will see how to handle file permissions when using user namespaces (hint: the principles are the same, but it takes a few extra steps to understand what the effective UID/GID will be). Finally, in the case of Docker, we will see a few edge cases where you can still be caught off guard by file permissions and volume declarations inside a Dockerfile.
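As a first taste of those extra steps, here is a hedged Python sketch of how an ID inside a user namespace translates to an ID outside it. It assumes the three-column format of `/proc/<pid>/uid_map` (start inside the namespace, start outside it, length of the range) and the simple single-level case. The function names and the example mapping `0 100000 65536` are assumptions for illustration, not values taken from this article.

```python
def parse_id_map(text):
    """Parse uid_map/gid_map text into (inside_start, outside_start, count) tuples."""
    entries = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 3:
            entries.append(tuple(int(field) for field in fields))
    return entries


def to_host_id(inside_id, id_map):
    """Translate an ID seen inside the namespace to the one seen outside it.

    Returns None when the ID falls in no mapped range.
    """
    for inside_start, outside_start, count in id_map:
        if inside_start <= inside_id < inside_start + count:
            return outside_start + (inside_id - inside_start)
    return None


if __name__ == "__main__":
    # Hypothetical mapping: IDs 0..65535 inside map to 100000..165535 outside.
    id_map = parse_id_map("0 100000 65536\n")
    print(to_host_id(0, id_map))     # 100000
    print(to_host_id(1000, id_map))  # 101000
```

Under such a mapping, a file written by root inside the container would be owned by UID 100000 from the host's point of view, so the host-side permissions have to be reasoned about with the translated IDs.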

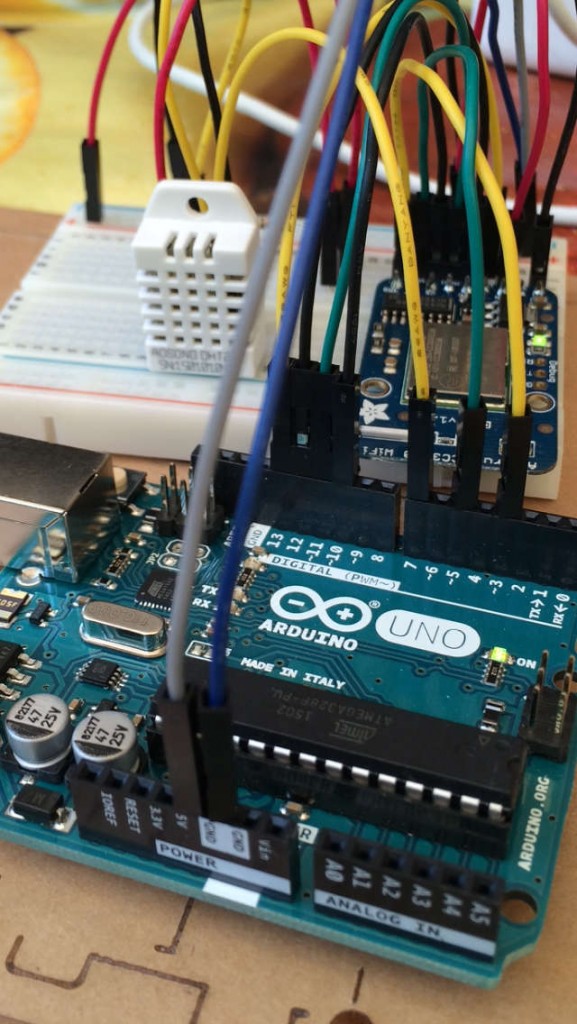

As I’m trying to prototype some sensors which I will then use around my home to monitor events, and perhaps also react to them, I’ve been looking a bit more at the

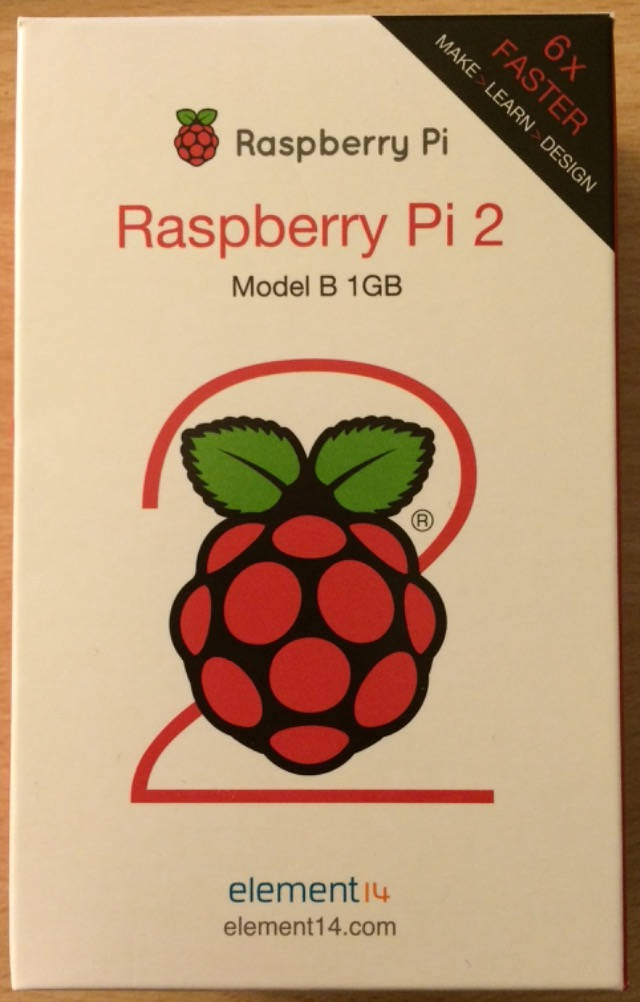

I therefore think a device as simple as a Raspberry Pi 2 is perfectly suited to be the core element of a Home Automation system. It has enough processing power, storage capacity and interface capabilities to host all the gathering of monitored data, their processing and analysis, and all the actuators. And it can easily use internet services (if need be) thanks to its network interface.

So I did not technically buy it, true, but I own it! You can see a screenshot on the right-hand side.
